Hey, so you’ve probably noticed—healthcare is changing fast.

And honestly? AI is right at the center of it. It’s not just hype anymore.

Hospitals and clinics are already using AI models to help with diagnosis, treatment planning, and even paperwork.

Think of these models like super-smart tools.

They can go through tons of patient data in seconds, spot patterns, and even predict when a patient might crash—hours before it’s obvious to humans.

One AI tool actually helped detect 20% more breast cancer cases without increasing false alarms.

That’s huge.

And it’s not just about accuracy—AI is helping save serious money too.

Early diagnosis = lower treatment costs.

Smarter resource use = fewer readmissions and less staff burnout.

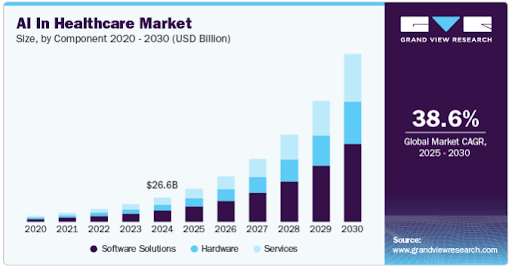

No wonder the AI-in-healthcare market is exploding: an estimated $26.69B in 2024, with projections of over $100B by 2028.

If you’re thinking about implementing AI, now’s honestly the best time to start exploring.

What Are AI Models in Healthcare?

Let’s break it down in simple terms.

AI models in healthcare are smart systems that help computers think a bit like humans. They learn from data, make predictions, and help solve problems, working from far more data, far faster, than any one person could.

At the heart of every AI model, there are four main things:

- Algorithms (how it thinks)

- Data (what it learns from)

- Training (how it learns)

- Inference (how it makes decisions after learning)
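To make these four pieces concrete, here’s a minimal Python sketch. It assumes a tiny made-up dataset (two hypothetical features, age in decades and a normalized lab value, with a 0/1 outcome) and uses plain logistic regression trained by stochastic gradient descent as the algorithm. Real clinical models need far more data and careful validation. This sketch only shows where training ends and inference begins.

```python
import math

# Hypothetical toy data: (age in decades, normalized lab value) -> outcome (1 = event)
DATA = [
    ((4.0, 0.2), 0),
    ((5.0, 0.4), 0),
    ((3.5, 0.1), 0),
    ((6.0, 0.9), 1),
    ((7.0, 1.1), 1),
    ((6.5, 1.0), 1),
]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights, bias, features):
    """Inference: turn a patient's features into a risk probability."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def train(data, epochs=2000, lr=0.05):
    """Training: fit logistic-regression weights with stochastic gradient descent."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label  # d(log-loss)/dz
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


weights, bias = train(DATA)  # training: done once, offline
risk = predict(weights, bias, (6.8, 1.06))  # inference: run for each new (hypothetical) patient
print(f"Predicted risk: {risk:.2f}")
```

`train` is the training step. `predict` on its own is inference, and inference is all a deployed model runs day to day.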

AI draws on techniques like machine learning, deep learning, natural language processing (NLP), generative AI, and more. Each one helps solve different problems, like reading X-rays, predicting patient risk, or summarizing clinical notes.

Now, compared to traditional software (which just follows set rules), AI learns patterns from data and can get better as it’s retrained on new data. That’s what makes it powerful.

Most AI models today are task-specific (like diagnosing a single condition). But newer models, such as large language models (LLMs) and other generative AI systems, are starting to handle multiple tasks, like helping doctors write better reports and giving patients personalized advice.

Top Use Cases of AI Models in Healthcare

Let’s look at some of the most powerful and practical ways AI is making a real difference:

1. Smarter Clinical Decisions

AI models now help doctors make better decisions by analyzing patient records, lab results, and research.

Advanced AI tools (like large language models) can scan huge amounts of medical data and suggest what to look out for or do next. It’s like having an extra pair of expert eyes—especially useful for complex cases.

2. Better Imaging & Radiology

AI is great at reading medical images.

Whether it’s X-rays, MRIs, ultrasounds, or retinal photos, AI can catch tiny issues early, like lung nodules or signs of diabetic eye disease. It helps radiologists work faster and can even draft reports automatically.

3. Predicting Patient Risks

AI can look at patient history and current data to predict future health issues.

For example, it can flag patients at risk of sepsis hours before it becomes obvious, or spot people heading toward heart failure or chronic diseases like diabetes weeks or months in advance.

4. Faster Diagnostics (like ECGs & Skin Conditions)

AI can quickly read ECGs or skin images and, for some conditions, detect issues about as accurately as an experienced doctor, and often faster.

It also helps spot early signs of neurological disorders by analyzing brain scans and related data.

5. Automating Clinical Documentation

Generative AI can now write, too. Paired with speech recognition, it can capture doctor-patient conversations, summarize them into notes, and draft reports.

Tools like these, often called ambient AI scribes, cut down on paperwork and give doctors more time with patients.

6. AI Chatbots & Virtual Assistants

Need to check symptoms or book an appointment? AI-powered assistants can do that.

These tools talk like humans and guide patients with reminders, health info, and support—often through mobile apps or wearables.

7. Personalized Treatment Plans

AI can suggest custom treatment plans based on a patient’s genetics, history, or wearable data.

It can even suggest drug dose adjustments or predict how someone might respond to cancer treatment. The goal? More effective care with fewer side effects.

Real-World Examples & Successful Deployments of AI Models

What You Must Know Before Adopting AI in Healthcare

Adopting AI in healthcare is not just about the tech. It’s about doing it right—ethically, legally, and practically.

1. Protecting patient data is non-negotiable

AI needs a lot of sensitive health data to work well. That means you must follow strict privacy laws like HIPAA (US), PHIPA (Ontario), PIPEDA (Canada), and GDPR (EU).

In 2025, simply saying you’re compliant isn’t enough—you’ll need to prove it. Think real-time monitoring, regular audits, and strong cloud security from day one.
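One way to back up a compliance claim with evidence is an audit trail that can’t be quietly edited after the fact. Below is a minimal sketch of a tamper-evident log. It assumes an in-memory list where each entry stores a SHA-256 hash of its own contents plus the previous entry’s hash, so changing any past entry breaks the chain. The `AuditLog` class, its fields, and the sample users and patient IDs are hypothetical. Neither HIPAA nor GDPR prescribes this exact technique, and a production system would also write entries to durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only audit log whose entries form a SHA-256 hash chain."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry

    def __init__(self):
        self.entries = []

    def _hash(self, record: dict) -> str:
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, user: str, action: str, patient_id: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "patient_id": patient_id,
            "prev_hash": prev_hash,
        }
        entry = dict(record, hash=self._hash(record))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or self._hash(record) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("dr_lee", "view_record", "patient-001")
log.append("model-service", "run_inference", "patient-001")
print(log.verify())  # True
log.entries[0]["user"] = "someone_else"  # simulate tampering with an old entry
print(log.verify())  # False
```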

2. Bias in AI is real—and risky

If your AI is trained on biased data, it could make unfair decisions.

That’s dangerous in healthcare. Startups need to train models on diverse, representative data and show that the AI performs equally well across patient groups, such as different ages, sexes, and ethnicities.
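A simple first check is to compare sensitivity (the share of real cases the model catches) across patient groups. This minimal sketch assumes you already have held-out labels, model predictions, and a group attribute for each patient. The group names, the records, and the 0.1 allowed gap are made up for illustration. A real fairness review would look at several metrics, such as false-positive rates and calibration, with confidence intervals.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return the true-positive rate per group from (group, label, prediction) tuples."""
    positives = defaultdict(int)
    caught = defaultdict(int)
    for group, label, prediction in records:
        if label == 1:
            positives[group] += 1
            if prediction == 1:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}


def flag_gap(rates, max_gap=0.1):
    """True if the best- and worst-served groups differ by more than max_gap (hypothetical threshold)."""
    return (max(rates.values()) - min(rates.values())) > max_gap


# Hypothetical held-out results: (group, true label, model prediction)
results = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

rates = sensitivity_by_group(results)
print(rates)             # group_a catches 2 of 3 cases, group_b only 1 of 3
print(flag_gap(rates))   # True
```

Sensitivity is a natural starting point for screening tools, where a missed case is usually the costlier error. Deciding how large a gap is acceptable is a clinical and ethical call, not a coding one.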

Tools like SHAP or LIME can help explain which inputs drove a given prediction. That makes problems like bias easier to spot and builds trust with both clinicians and patients.
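To show the idea behind tools like SHAP without depending on any library, here’s a hand-rolled sketch for the simplest case: a linear, logistic-regression-style risk model. If features are treated as independent, each feature’s SHAP value on the log-odds scale works out to its weight times how far the patient’s value sits from the population average. The feature names, weights, intercept, and averages below are hypothetical. For real models you’d use the `shap` or `lime` packages themselves.

```python
# Hypothetical logistic-regression weights (log-odds per unit) and population means
WEIGHTS = {"age": 0.04, "lactate": 0.9, "heart_rate": 0.02}
MEANS = {"age": 60.0, "lactate": 1.5, "heart_rate": 85.0}
INTERCEPT = -4.0


def log_odds(patient):
    """Linear model output on the log-odds scale."""
    return INTERCEPT + sum(WEIGHTS[f] * patient[f] for f in WEIGHTS)


def explain(patient):
    """Per-feature contributions: weight * (value - mean), assuming independent features.

    For a linear model these match SHAP values on the log-odds scale,
    and they sum to log_odds(patient) - log_odds(MEANS).
    """
    return {f: WEIGHTS[f] * (patient[f] - MEANS[f]) for f in WEIGHTS}


patient = {"age": 72.0, "lactate": 3.5, "heart_rate": 110.0}
contributions = explain(patient)
for feature, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>10}: {value:+.2f}")
```

Sorting by absolute size puts the biggest drivers of this patient’s score at the top. A clinician can sanity-check that kind of view at a glance.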

3. Integration is often a pain point

Many hospitals use outdated EHR/EMR systems. Your AI must work smoothly with them. If it doesn’t fit into their daily workflow, it won’t be used.

Plan for integration early. Use interoperability standards like HL7 FHIR. And design your product to feel like a natural part of the provider’s workflow, not a disruption.
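As a rough picture of what FHIR-based integration can look like, this sketch packages a model’s output as a FHIR R4 `RiskAssessment` resource, the kind of JSON an EHR’s FHIR API could accept. It assumes a hypothetical patient ID and uses a plain-text outcome instead of a coded terminology. Sending the resource to a real server, authentication, and any hospital-specific FHIR profile are not covered here.

```python
import json
from datetime import datetime, timezone


def to_fhir_risk_assessment(patient_id: str, outcome: str, probability: float) -> dict:
    """Wrap a model score in a minimal FHIR R4 RiskAssessment resource (sketch)."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return {
        "resourceType": "RiskAssessment",
        "status": "preliminary",  # model output still awaiting clinician review
        "subject": {"reference": f"Patient/{patient_id}"},
        "occurrenceDateTime": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "prediction": [
            {
                "outcome": {"text": outcome},  # a real integration would use a coded concept
                "probabilityDecimal": round(probability, 3),
            }
        ],
    }


# Hypothetical patient ID and model score
resource = to_fhir_risk_assessment("example-123", "Sepsis within 48 hours", 0.27)
print(json.dumps(resource, indent=2))
```

Setting the status to `preliminary` is one way to signal that a model score still needs clinician review. The exact status, coding system, and profile should follow the receiving hospital’s FHIR implementation guide.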

4. Explainability builds clinical trust

Doctors won’t use an AI tool they don’t understand. If your AI makes a prediction, clinicians need to see why; otherwise, they won’t trust it.

Use explainable AI techniques and make sure your system can show how it reached a decision. Also, help doctors learn how to interpret those insights.

5. Infrastructure is key—and costly

You’ll need a strong backend to make AI work. That includes cloud hosting, secure data storage, and powerful servers for training models.

Tools from AWS, Google Cloud, or Azure can help. But be ready for upfront and ongoing costs. Without this setup, even the best AI won’t scale or stay compliant.

Should You Build or Integrate AI in Healthcare? Let’s Break It Down.

When healthcare providers think about using AI, the first big question is:

Should we build our own AI model or use something that’s already made?

Both paths work — but which one makes sense for you depends on your goals, data, budget, and tech team.

How SyS Creations Helps You Make the Right Choice

We know healthcare. We know tech. And we know how to blend both to make AI work for your clinic — not against it.

Here’s how we help:

- We set up secure cloud infrastructure designed to meet HIPAA, GDPR, and PIPEDA requirements.

- We understand clinical workflows — so AI fits in naturally.

- We guide you through the legal side and documentation — especially if your tool is a medical device.

- We bring in ML engineers, compliance experts, and healthcare designers — all under one roof.

And most importantly, we help you test the AI in real-life settings — to make sure it’s safe, reliable, and accurate.

That’s how you build trust with doctors, patients, and regulators.